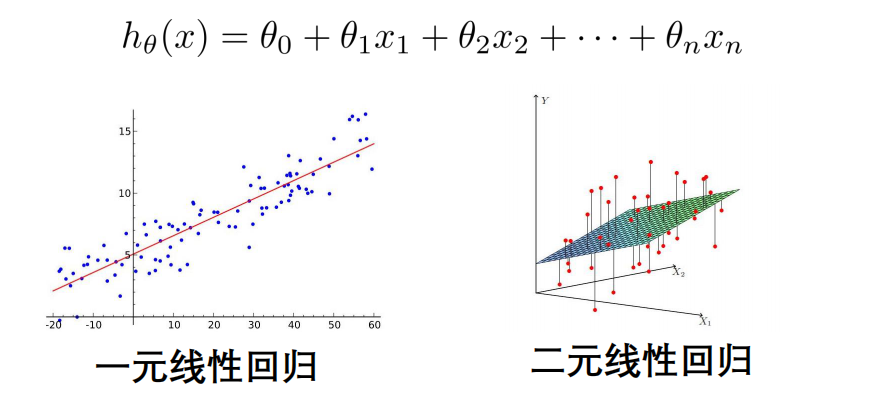

Linear and Nonlinear Regression

Simple Linear Regression with Gradient Descent

Regression

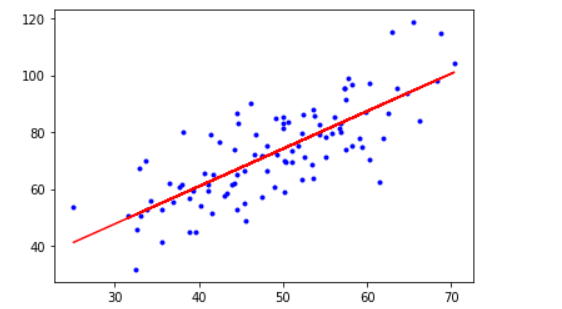

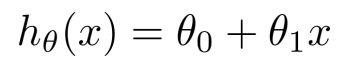

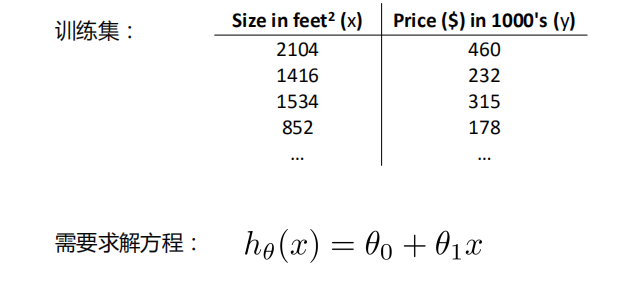

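Simple Linear Regression

- Regression analysis builds an equation that models how two or more variables are related.

- The variable being predicted is called the dependent variable, or output.

- The variable used to make the prediction is called the independent variable, or input.

- Simple linear regression involves one independent variable and one dependent variable.

- The relationship between these two variables is modeled with a straight line.

- If there are two or more independent variables, the analysis is called multiple regression.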

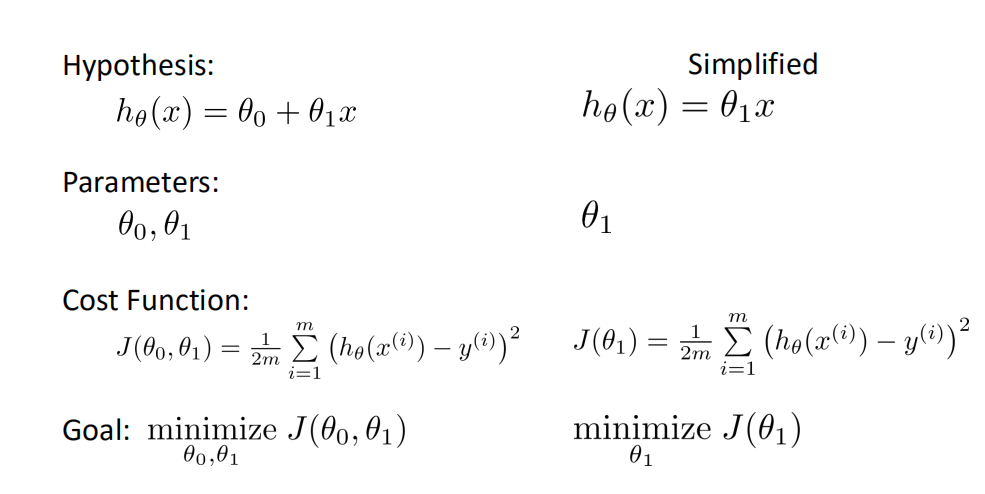

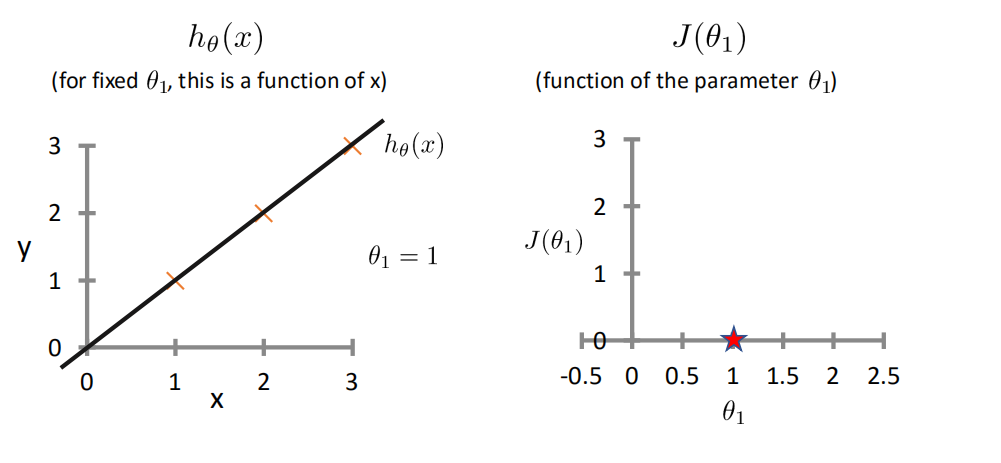

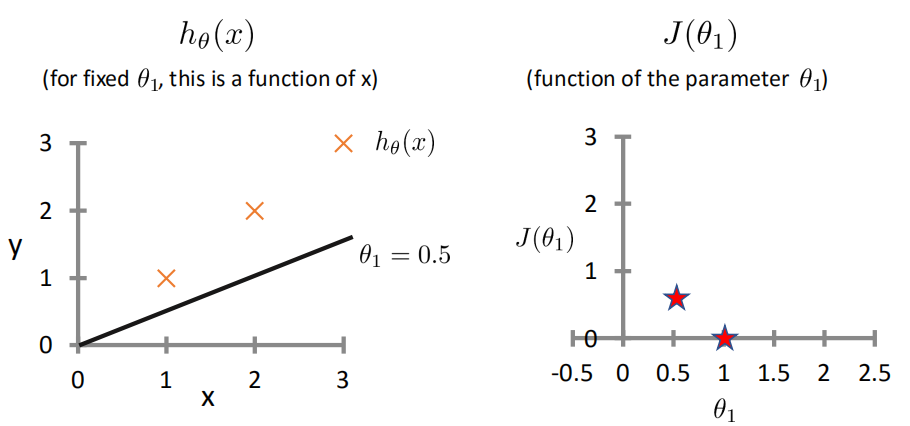

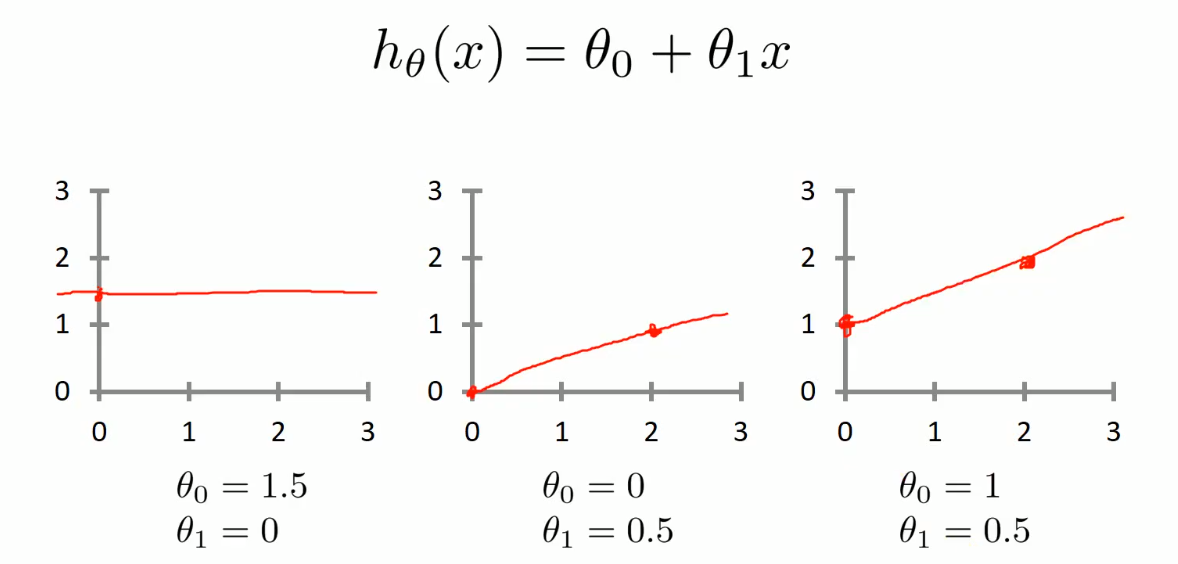

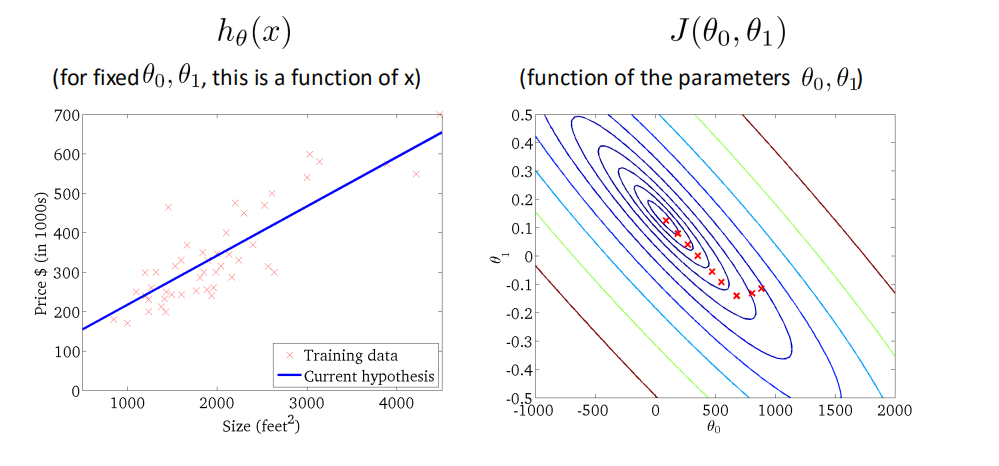

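$h_\theta(x) = \theta_0 + \theta_1 x$

The graph of this equation is a straight line, called the regression line. Here $\theta_1$ is the slope of the regression line and $\theta_0$ is its intercept.

- The three kinds of relationship in linear regression:

- Positive correlation

- Negative correlation

- No correlation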

Solving for the Coefficients

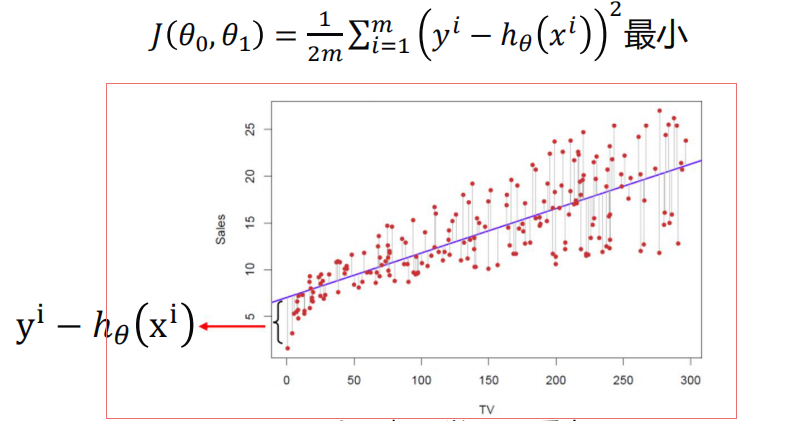

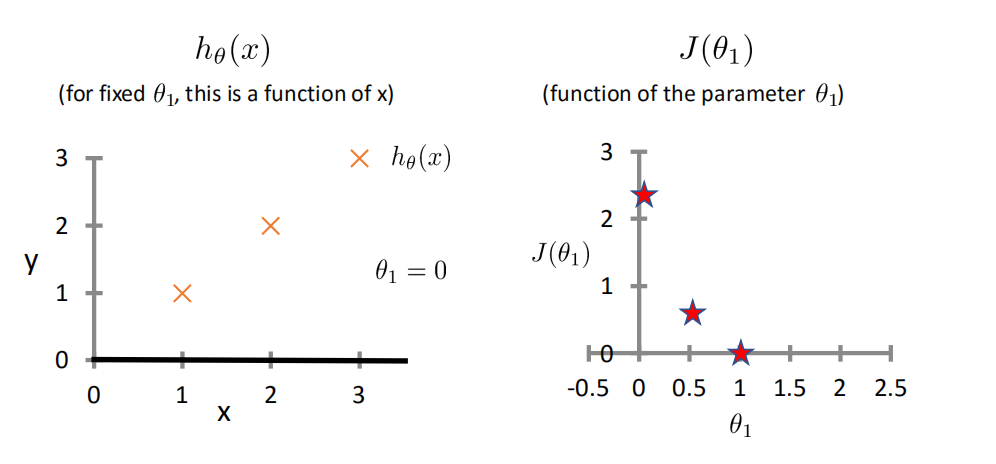

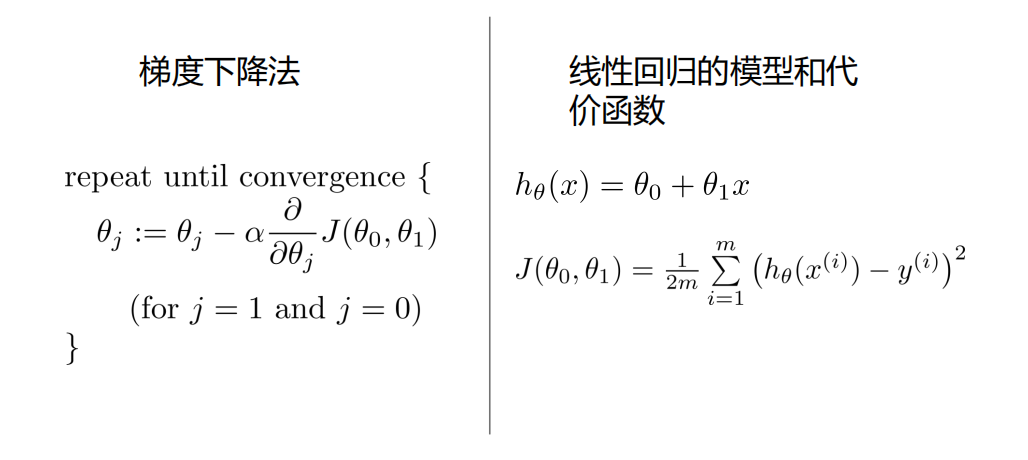

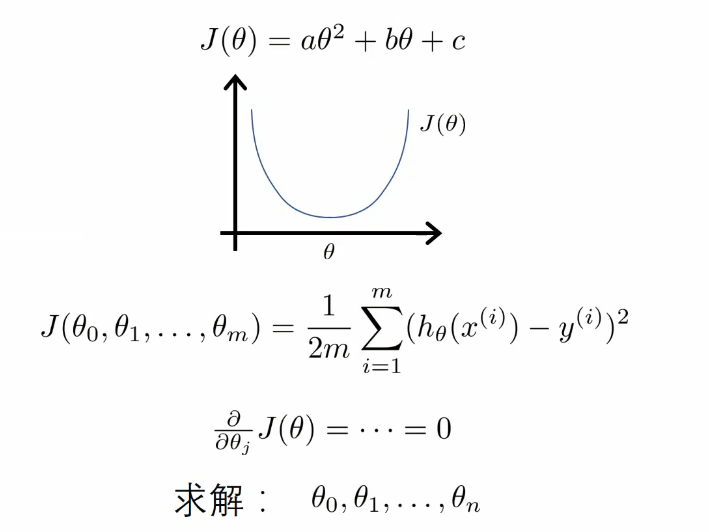

代价函数Cost Function

-

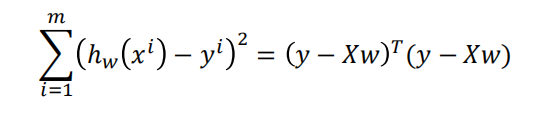

最小二乘法

-

真实值y,预测值ℎ𝜃 𝑥 ,则误差平方为(y − ℎ_𝜃(x))^2

-

找到合适的参数,使得误差平方和:

-

-

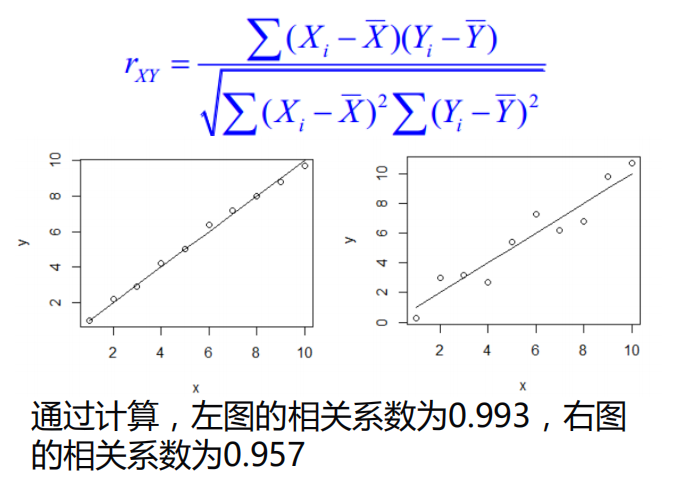
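The squared-error cost can be sketched in a few lines of Python. This is a minimal illustration, not the slide's own code: the function names `predict` and `cost`, the toy data, and the `1 / (2m)` scaling are all assumptions made for the example (the scaling does not change which parameters are optimal).

```python
import numpy as np

def predict(theta0, theta1, x):
    """Hypothesis h_theta(x) = theta0 + theta1 * x."""
    return theta0 + theta1 * x

def cost(theta0, theta1, x, y):
    """Sum of squared errors scaled by 1 / (2m) (assumed scaling)."""
    residuals = y - predict(theta0, theta1, x)
    return np.sum(residuals ** 2) / (2 * len(x))

# Toy data lying exactly on y = 1 + 2x (illustrative values only).
x = np.array([0.0, 1.0, 2.0, 3.0])
y = 1.0 + 2.0 * x

perfect_cost = cost(1.0, 2.0, x, y)  # parameters that match the data exactly
worse_cost = cost(0.0, 0.0, x, y)    # all-zero parameters fit worse
```

The exact-fit parameters give a cost of zero, and any other choice gives a larger value, which is what the minimization searches for.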

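Correlation Coefficient

The correlation coefficient measures the strength of the linear relationship between two variables.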

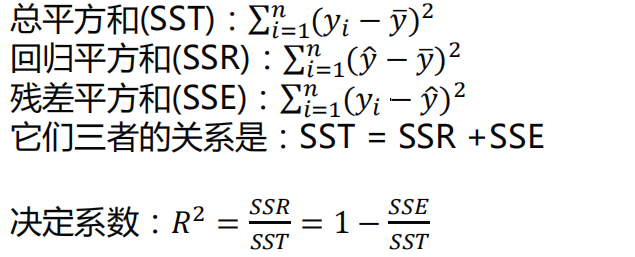

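Coefficient of Determination

The correlation coefficient describes the linear relationship between two variables. The coefficient of determination $R^2$ applies more broadly: it can also describe nonlinear relationships and relationships with two or more independent variables, and it can be used to evaluate how well a model fits.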
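As a rough sketch, both quantities can be computed with NumPy. The helper names and the sample values are assumptions for illustration. The definitions used are the standard Pearson correlation and $R^2 = 1 - SS_{res}/SS_{tot}$.

```python
import numpy as np

def correlation_coefficient(x, y):
    """Pearson correlation between two 1-D arrays."""
    dx, dy = x - x.mean(), y - y.mean()
    return np.sum(dx * dy) / np.sqrt(np.sum(dx ** 2) * np.sum(dy ** 2))

def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

# Illustrative, nearly linear data (made-up values).
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.1])

# Least-squares line; np.polyfit returns the highest-degree coefficient first.
slope, intercept = np.polyfit(x, y, deg=1)

r = correlation_coefficient(x, y)
r2 = r_squared(y, intercept + slope * x)
```

For a least-squares line with an intercept and a single feature, `r2` equals `r ** 2`. With several features or a nonlinear model, only `r_squared` still applies.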

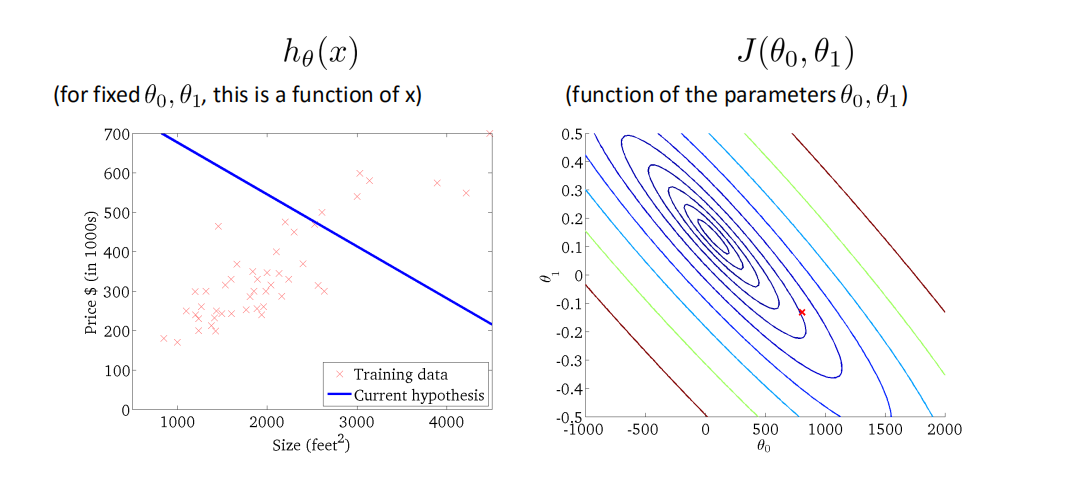

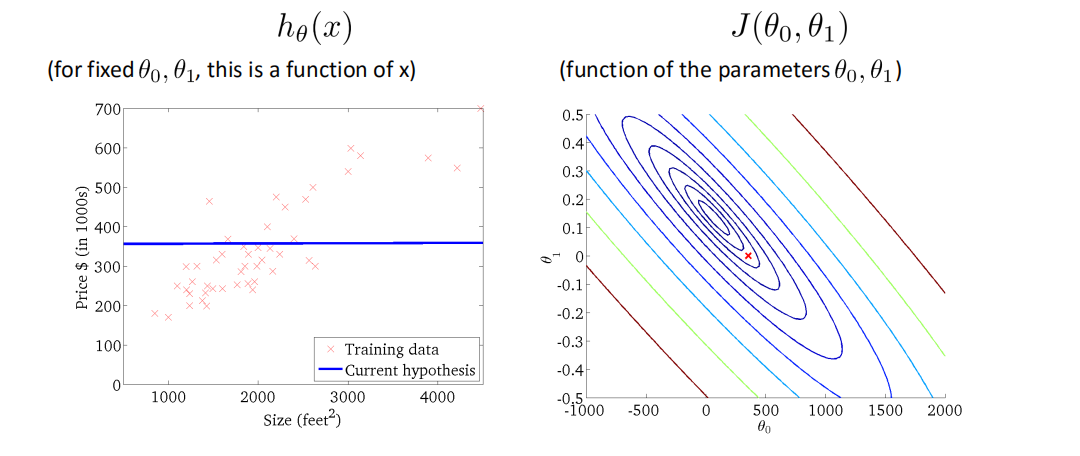

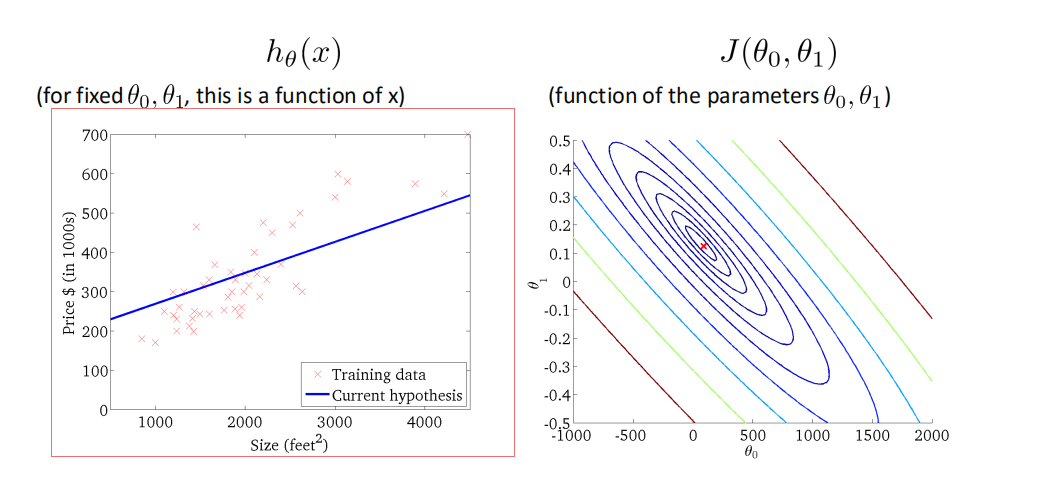

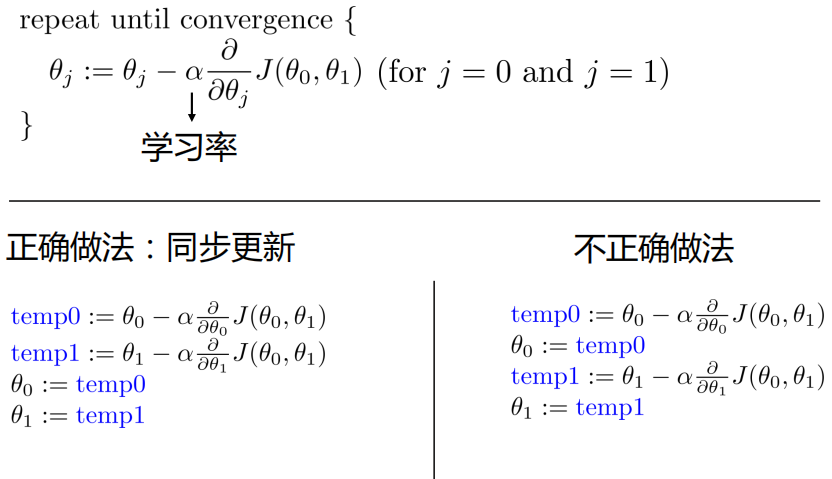

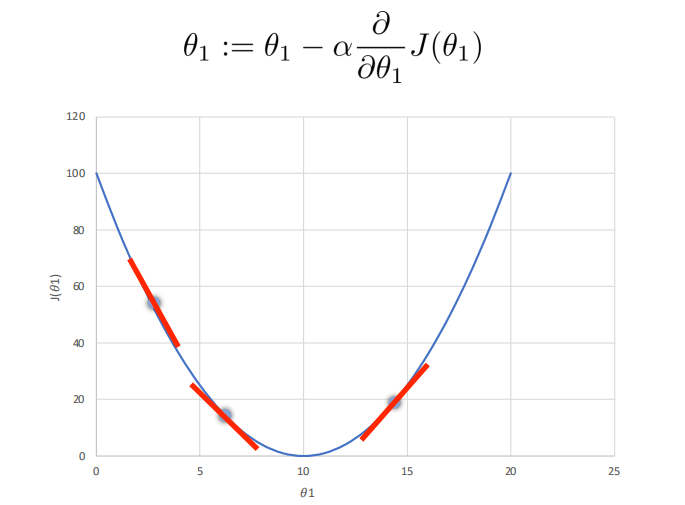

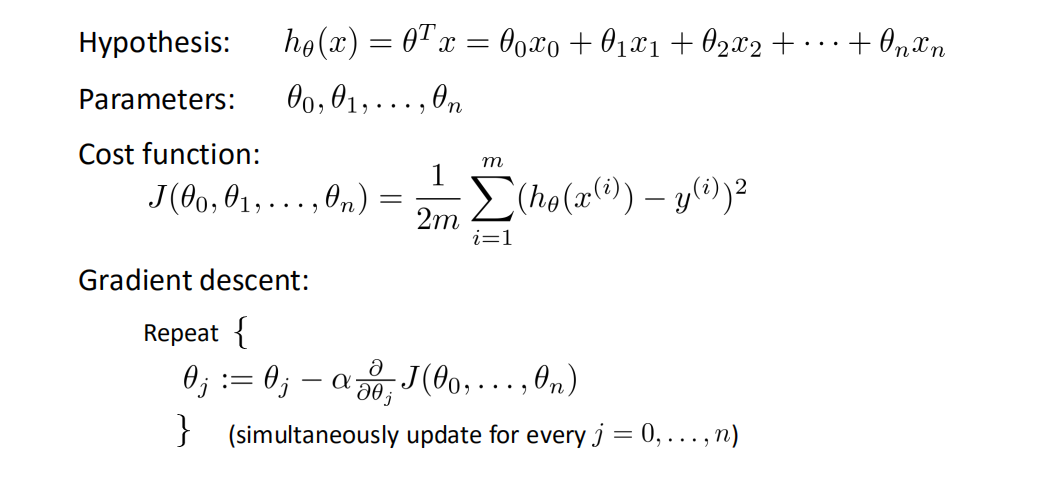

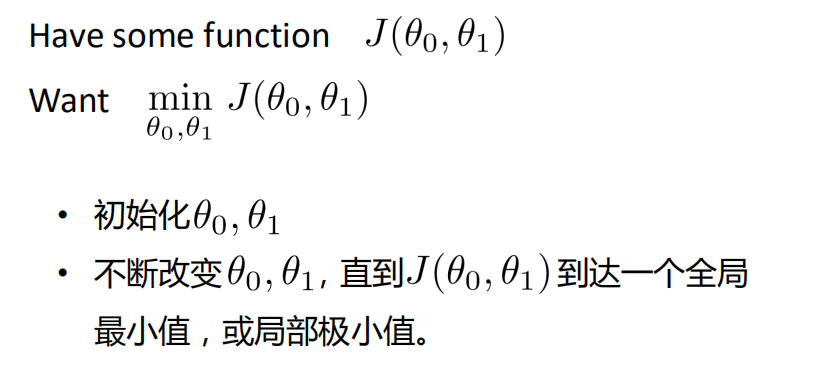

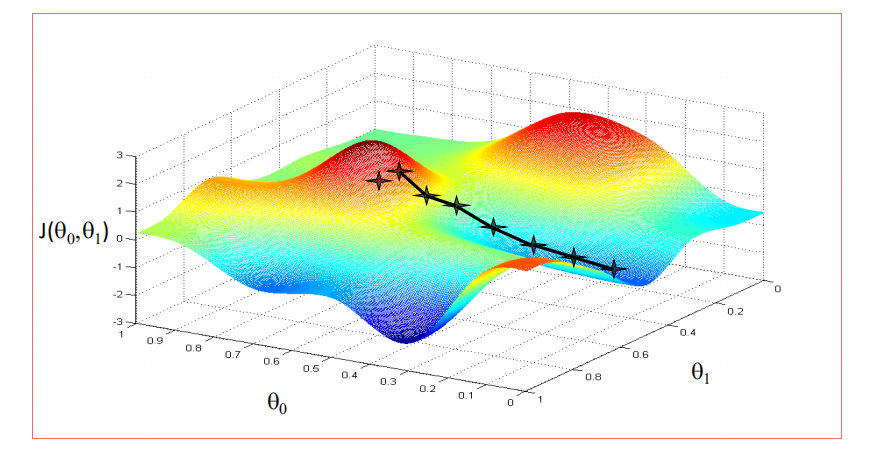

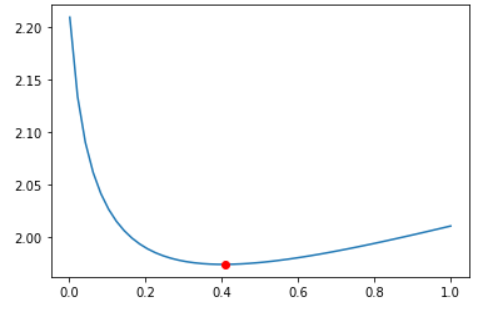

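Gradient Descent

- The learning rate must be neither too small nor too large. It is worth trying several values, such as 0.1, 0.03, 0.01, 0.003, 0.001, 0.0003, 0.0001, … A rate that is too small makes convergence very slow, and a rate that is too large can overshoot the minimum and diverge. On a non-convex cost function, gradient descent may also settle in a local minimum.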
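The effect of the learning rate can be seen on a toy problem. The sketch below minimizes $f(x) = x^2$, a function chosen here purely for illustration. The starting point, the step count and the three rates are assumptions.

```python
def gradient_descent_1d(lr, x0=1.0, steps=50):
    """Minimize f(x) = x^2 (gradient 2x) starting from x0; return the final x."""
    x = x0
    for _ in range(steps):
        x -= lr * 2 * x
    return x

small = gradient_descent_1d(lr=0.0001)  # barely moves away from x0
good = gradient_descent_1d(lr=0.1)      # ends close to the minimum at 0
large = gradient_descent_1d(lr=1.1)     # each step overshoots; |x| grows
```

With the same number of steps, the small rate leaves `x` almost unchanged, the moderate rate reaches the minimum, and the large rate moves further away on every update.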

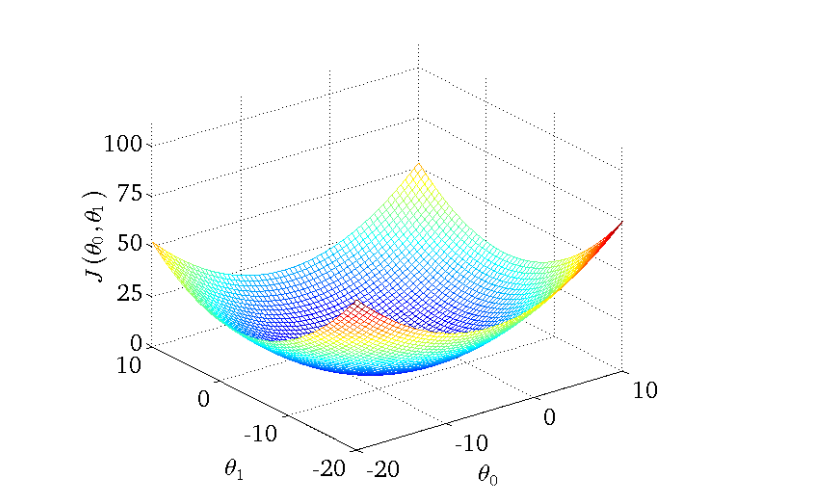

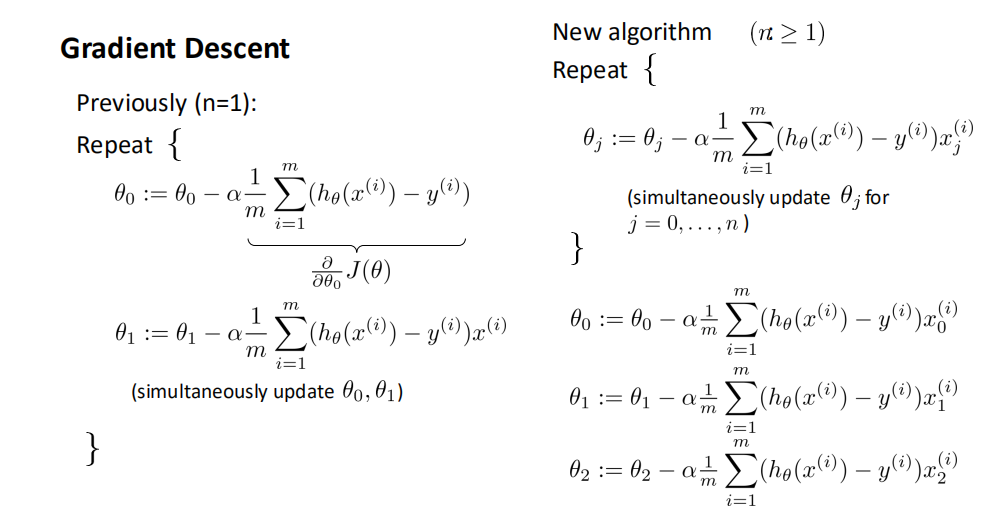

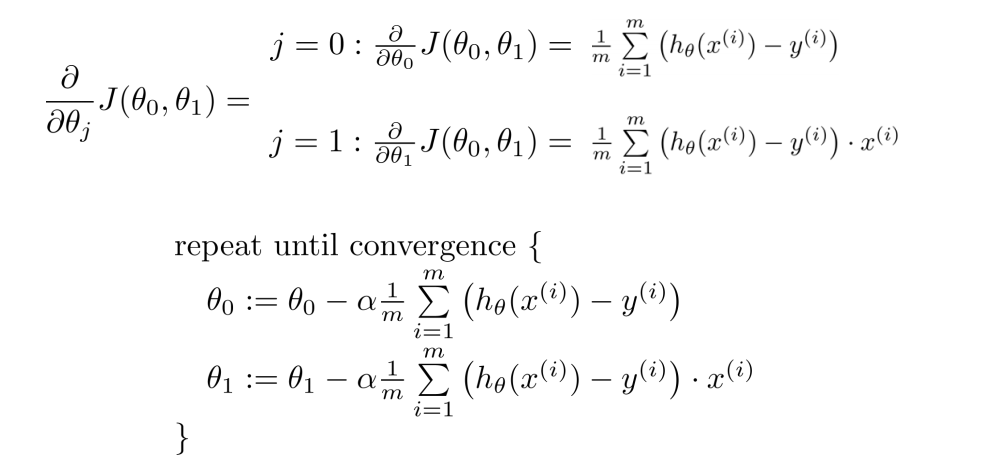

Solving Linear Regression with Gradient Descent

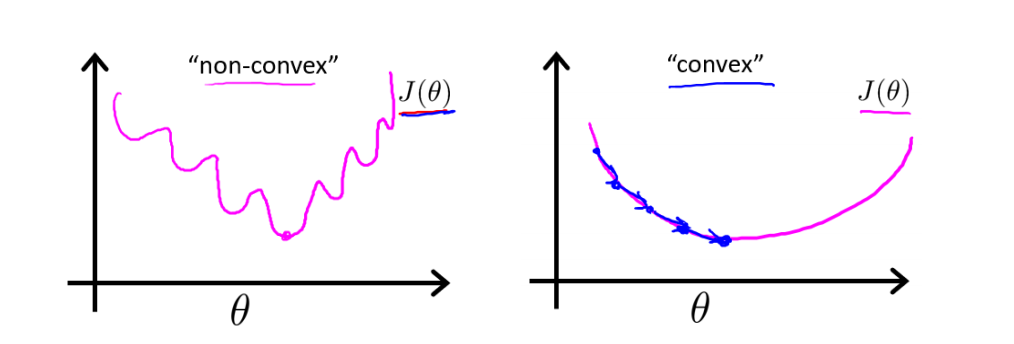

Non-convex and Convex Functions

- The cost function of linear regression is convex.

Optimization Process

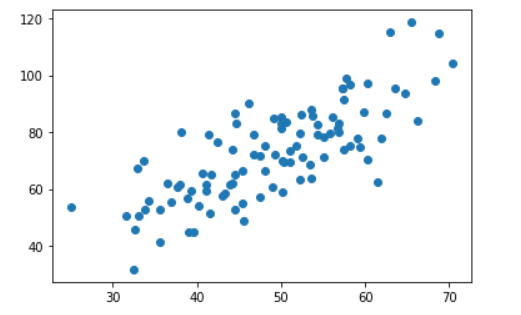

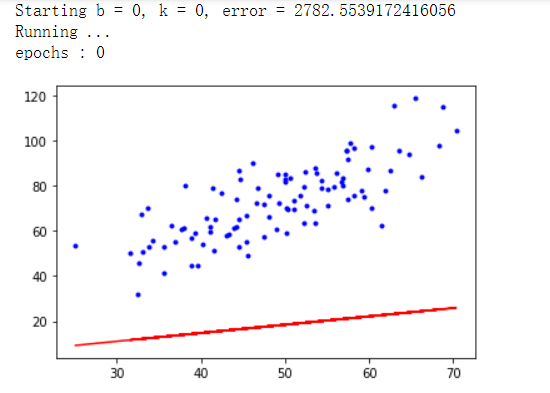

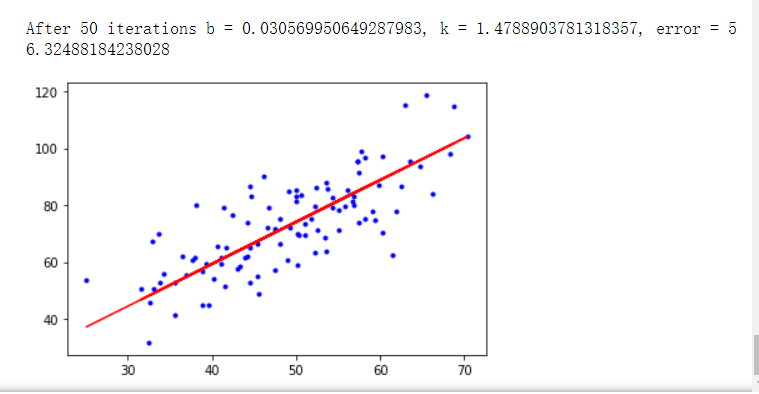

Code Implementation
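The original code cell did not survive extraction. The following is a minimal sketch of gradient descent for simple linear regression, not the author's code. The synthetic data, the function name `fit_line_gd`, the learning rate and the epoch count are all assumptions.

```python
import numpy as np

def fit_line_gd(x, y, lr=0.05, epochs=2000):
    """Fit y ~ theta0 + theta1 * x by batch gradient descent."""
    theta0, theta1 = 0.0, 0.0
    m = len(x)
    for _ in range(epochs):
        error = (theta0 + theta1 * x) - y   # prediction minus truth
        grad0 = error.sum() / m             # dJ/dtheta0 for J = (1/2m) * sum(error^2)
        grad1 = (error * x).sum() / m       # dJ/dtheta1
        # Update both parameters simultaneously.
        theta0 -= lr * grad0
        theta1 -= lr * grad1
    return theta0, theta1

# Synthetic data around y = 3 + 2x (assumed for illustration).
rng = np.random.default_rng(0)
x = rng.uniform(0, 5, size=100)
y = 3.0 + 2.0 * x + rng.normal(0, 0.1, size=100)

theta0, theta1 = fit_line_gd(x, y)
```

On this data the recovered intercept and slope land close to 3 and 2. A different data scale would likely need a different learning rate.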

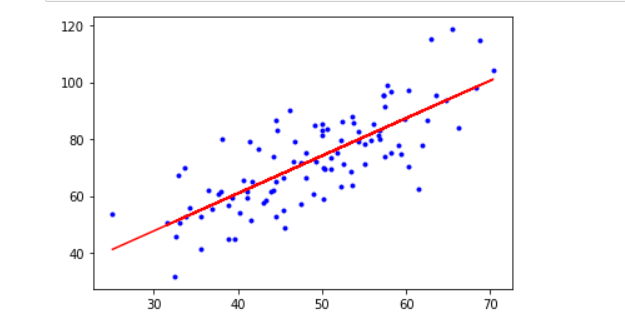

Results

Simple Linear Regression with sklearn
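The sklearn code cell is missing from the extracted text. A hedged sketch of the same fit using `sklearn.linear_model.LinearRegression` might look like this. The synthetic data is an assumption, not the data set used in the slides.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Synthetic data around y = 3 + 2x (assumed for illustration).
rng = np.random.default_rng(0)
x = rng.uniform(0, 5, size=100)
y = 3.0 + 2.0 * x + rng.normal(0, 0.1, size=100)

model = LinearRegression()
model.fit(x.reshape(-1, 1), y)  # sklearn expects a 2-D feature matrix

intercept, slope = model.intercept_, model.coef_[0]
prediction = model.predict(np.array([[2.0]]))[0]  # predicted y at x = 2
```

`LinearRegression` solves the least-squares problem directly, so no learning rate or iteration count has to be chosen.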

Results

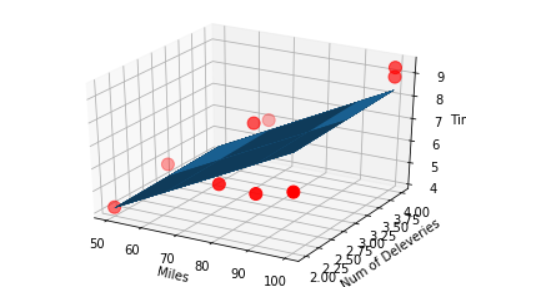

Multiple Linear Regression with Gradient Descent

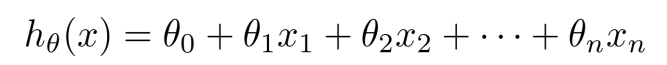

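Multiple Linear Regression

- Single feature

- Multiple features

Multiple Linear Regression Model

Use multiple linear regression when the value of Y is influenced by more than one factor.

Gradient Descent for Multiple Linear Regression

Code Implementation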
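Since the original code is not available, here is a vectorized sketch of gradient descent for several features. The two-feature synthetic data, the function name `fit_linear_gd` and the hyperparameters are assumptions made for the example.

```python
import numpy as np

def fit_linear_gd(X, y, lr=0.1, epochs=5000):
    """Batch gradient descent for y ~ theta[0] + X @ theta[1:]."""
    m = X.shape[0]
    Xb = np.hstack([np.ones((m, 1)), X])  # prepend a column of ones for the intercept
    theta = np.zeros(Xb.shape[1])
    for _ in range(epochs):
        gradient = Xb.T @ (Xb @ theta - y) / m  # gradient of (1/2m) * ||Xb theta - y||^2
        theta -= lr * gradient
    return theta

# Synthetic data: y = 1 + 2*x1 - 3*x2 plus small noise (assumed).
rng = np.random.default_rng(0)
X = rng.uniform(-1, 1, size=(200, 2))
y = 1.0 + 2.0 * X[:, 0] - 3.0 * X[:, 1] + rng.normal(0, 0.05, size=200)

theta = fit_linear_gd(X, y)  # approximately [1, 2, -3]
```

Writing the update in matrix form keeps the code identical for any number of features.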

Results

Multiple Linear Regression with sklearn
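A short sketch of the sklearn version, under the same assumption of synthetic two-feature data (the original cell and data set are not available):

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Synthetic data: y = 1 + 2*x1 - 3*x2 plus small noise (assumed).
rng = np.random.default_rng(0)
X = rng.uniform(-1, 1, size=(200, 2))
y = 1.0 + 2.0 * X[:, 0] - 3.0 * X[:, 1] + rng.normal(0, 0.05, size=200)

model = LinearRegression().fit(X, y)
coefficients = model.coef_    # one weight per feature
intercept = model.intercept_
```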

- The results are similar to those above, so they are not shown here.

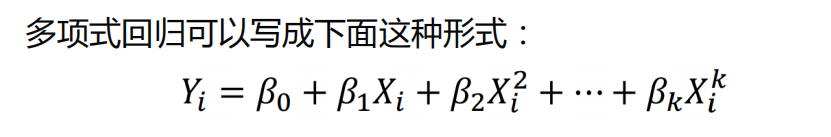

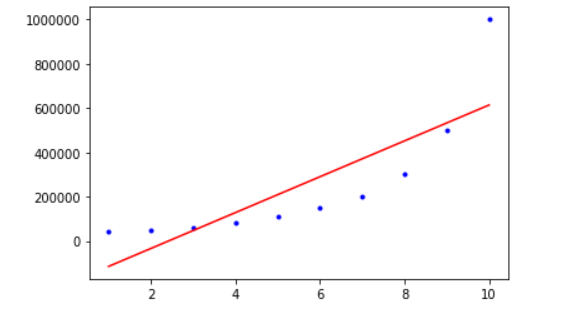

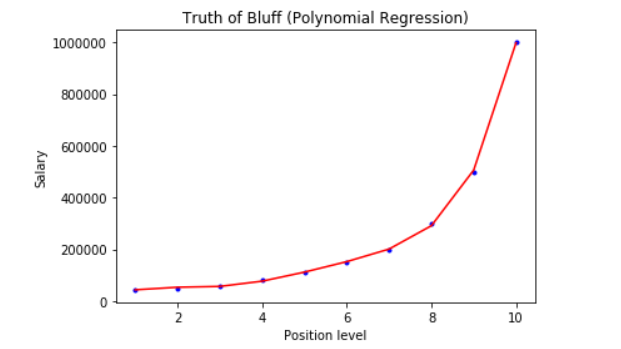

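Polynomial Regression and Its Application

Code Implementation

- A plain linear regression does not fit this data well, so we apply a polynomial feature transformation to the data instead.

- After the polynomial feature transformation, the fit is much better.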
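The comparison described above can be sketched with `PolynomialFeatures` from sklearn. The quadratic toy data and the choice of `degree=2` are assumptions for illustration. The slides' own data set is not reproduced here.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.preprocessing import PolynomialFeatures

# Noiseless quadratic data (assumed): y = 0.5*x^2 + x + 2.
x = np.linspace(-3, 3, 50).reshape(-1, 1)
y = 0.5 * x[:, 0] ** 2 + x[:, 0] + 2.0

# Straight-line fit on the raw feature.
linear_r2 = LinearRegression().fit(x, y).score(x, y)

# Expand x into [1, x, x^2], then fit a linear model on the expanded features.
poly = PolynomialFeatures(degree=2)
x_poly = poly.fit_transform(x)
poly_r2 = LinearRegression().fit(x_poly, y).score(x_poly, y)
```

Polynomial regression is still linear in its parameters. Only the features are transformed, which is why the same `LinearRegression` estimator can be reused.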

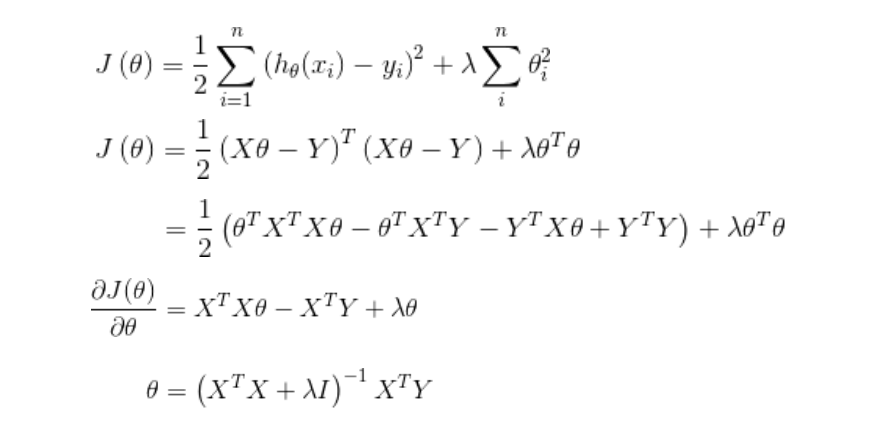

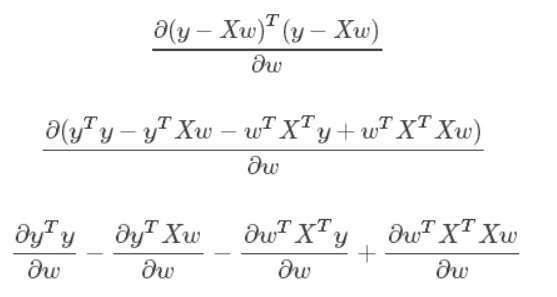

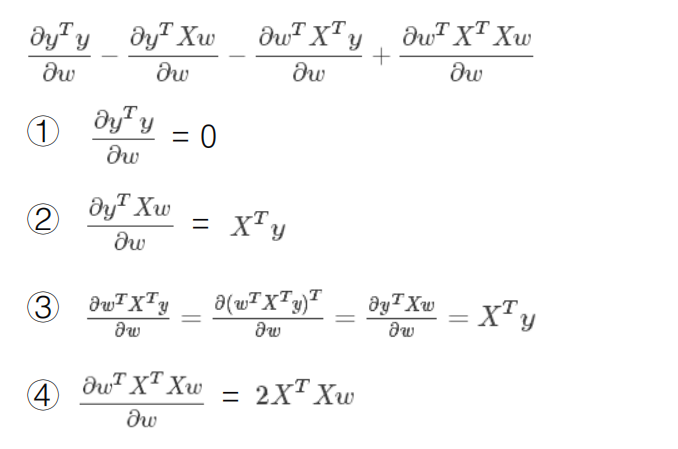

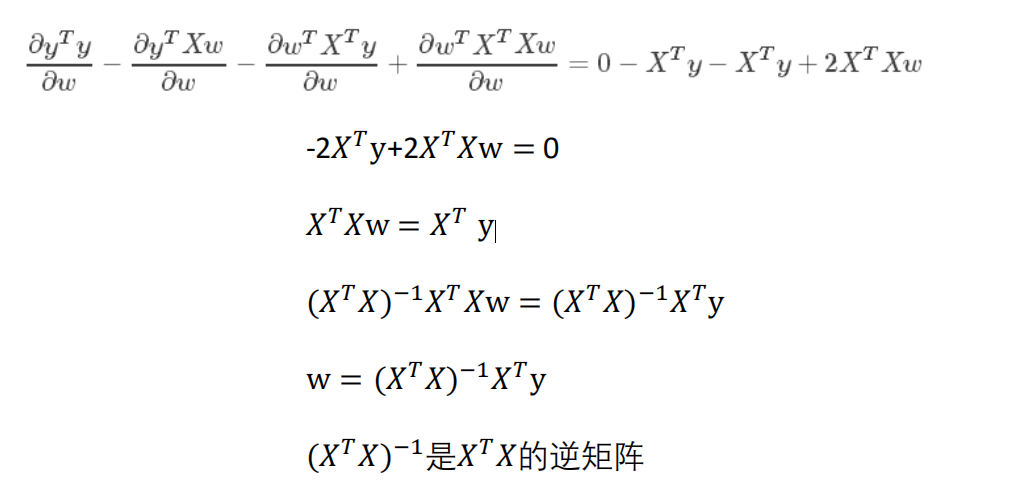

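Normal Equation

Numerator layout: the numerator is a column vector, or the denominator is a row vector.

Denominator layout: the numerator is a row vector, or the denominator is a column vector.

Derivative identities: https://en.wikipedia.org/wiki/Matrix_calculus#Scalar-by-vector_identiti

Cases Where the Matrix Is Not Invertible

- Linearly dependent features (multicollinearity)

- Too many features (number of samples m <= number of features n)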

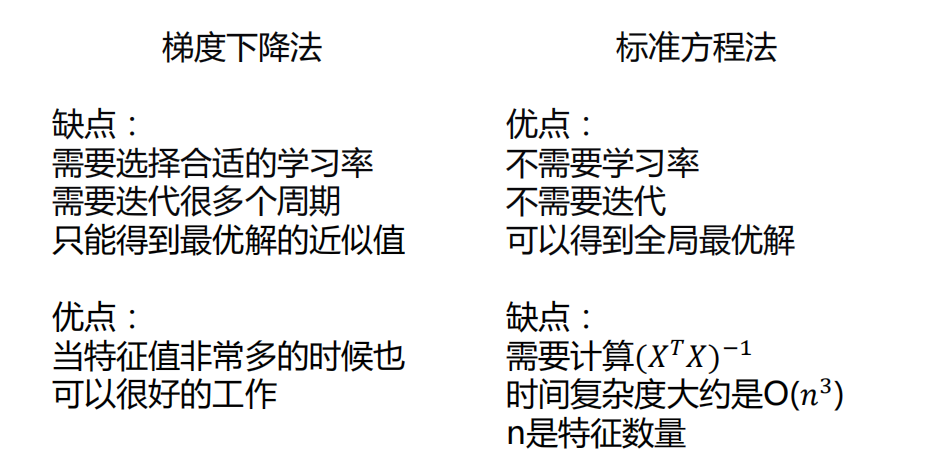

Gradient Descent vs. Normal Equation

Code Implementation
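A minimal sketch of the normal equation $\theta = (X^TX)^{-1}X^Ty$ in NumPy follows. The small data set and the function name `normal_equation` are assumptions. The code uses `np.linalg.solve` rather than an explicit inverse, which is a common numerical choice and not necessarily what the original code did.

```python
import numpy as np

def normal_equation(X, y):
    """Solve (Xb^T Xb) theta = Xb^T y, where Xb is X with an intercept column."""
    Xb = np.hstack([np.ones((X.shape[0], 1)), X])
    return np.linalg.solve(Xb.T @ Xb, Xb.T @ y)

# Small exact example (assumed): y = 1 + 2*x1 - 1*x2.
X = np.array([[1.0, 2.0], [2.0, 0.0], [3.0, 1.0], [4.0, 3.0]])
y = 1.0 + 2.0 * X[:, 0] - 1.0 * X[:, 1]

theta = normal_equation(X, y)  # recovers [1, 2, -1] up to rounding
```

If the features are linearly dependent or there are no more samples than features, `Xb.T @ Xb` is singular and `np.linalg.solve` raises `LinAlgError`.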

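Results

Feature Scaling and Cross-Validation

Feature Scaling

Data normalization:

Data normalization rescales the values to the range 0 to 1 or -1 to 1.

- To map arbitrary data into the range 0 to 1: newValue = (oldValue - min) / (max - min)

- To map arbitrary data into the range -1 to 1: newValue = ((oldValue - min) / (max - min) - 0.5) * 2

Mean normalization (standardization):

Here oldValue is the feature value, u is the mean of the data, and s is the standard deviation of the data.

newValue = (oldValue - u) / s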
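The three formulas translate directly into NumPy. The function names and the sample values below are assumptions for illustration. `standardize` divides by the standard deviation.

```python
import numpy as np

def min_max_scale(values):
    """Map values into [0, 1]: (v - min) / (max - min)."""
    lo, hi = values.min(), values.max()
    return (values - lo) / (hi - lo)

def min_max_scale_symmetric(values):
    """Map values into [-1, 1]: ((v - min) / (max - min) - 0.5) * 2."""
    return (min_max_scale(values) - 0.5) * 2

def standardize(values):
    """Subtract the mean and divide by the standard deviation."""
    return (values - values.mean()) / values.std()

data = np.array([2.0, 4.0, 6.0, 10.0])   # made-up feature column
unit = min_max_scale(data)               # [0.0, 0.25, 0.5, 1.0]
symmetric = min_max_scale_symmetric(data)  # [-1.0, -0.5, 0.0, 1.0]
z = standardize(data)                    # mean 0, standard deviation 1
```

In practice the minimum, maximum, mean and standard deviation are computed on the training set and then reused to transform the test set.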

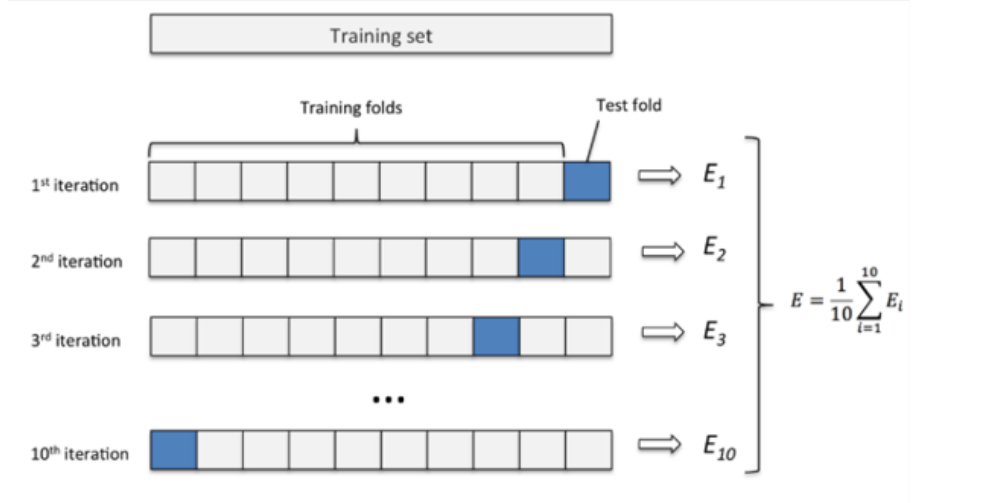

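Cross-Validation

- Split the data into several parts. Each part serves in turn as the test set while the remaining parts form the training set. The final error is the average of the errors over all parts.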
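The procedure can be sketched as k-fold cross-validation around a least-squares fit. Everything named here (`k_fold_mse`, `k=5`, the synthetic data, mean squared error as the metric) is an assumption chosen for the example.

```python
import numpy as np

def k_fold_mse(X, y, k=5, seed=0):
    """Return (average test MSE, per-fold MSE list) for a least-squares fit."""
    indices = np.random.default_rng(seed).permutation(len(y))
    folds = np.array_split(indices, k)
    errors = []
    for i in range(k):
        test_idx = folds[i]  # fold i is held out for testing
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        Xb_train = np.hstack([np.ones((len(train_idx), 1)), X[train_idx]])
        Xb_test = np.hstack([np.ones((len(test_idx), 1)), X[test_idx]])
        theta, *_ = np.linalg.lstsq(Xb_train, y[train_idx], rcond=None)
        errors.append(np.mean((Xb_test @ theta - y[test_idx]) ** 2))
    return float(np.mean(errors)), errors

# Synthetic data around y = 3 + 2x (assumed).
rng = np.random.default_rng(1)
X = rng.uniform(0, 5, size=(60, 1))
y = 3.0 + 2.0 * X[:, 0] + rng.normal(0, 0.1, size=60)

mean_error, fold_errors = k_fold_mse(X, y, k=5)
```

Every sample is used for testing exactly once, so the averaged error is less sensitive to one lucky or unlucky split.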

Overfitting & Regularization

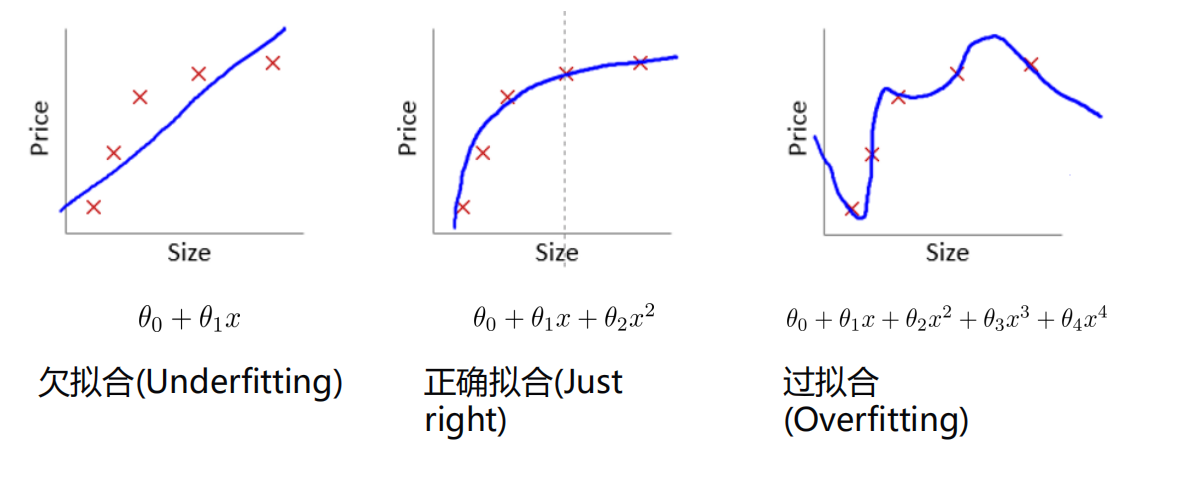

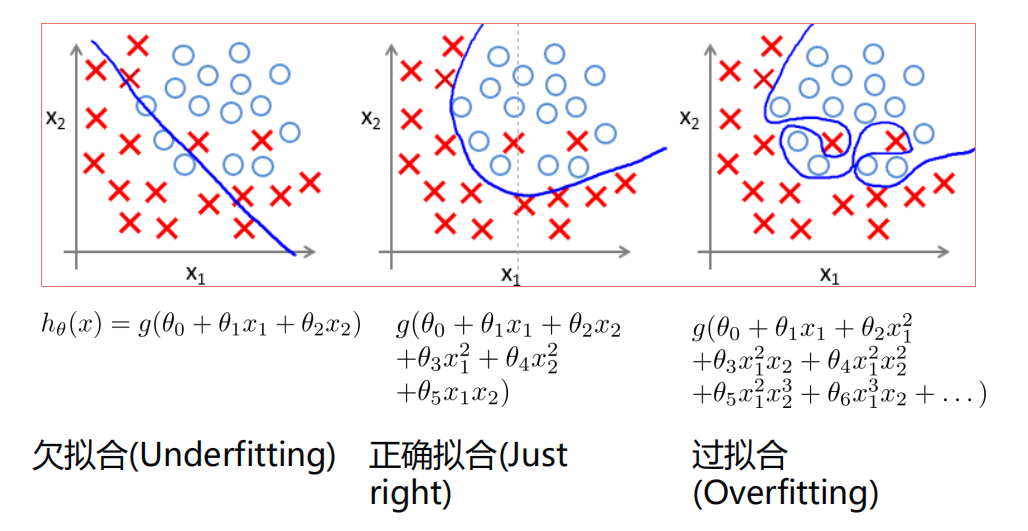

Overfitting

Types of fit:

Preventing overfitting:

- Reduce the number of features

- Increase the amount of data

- Regularization

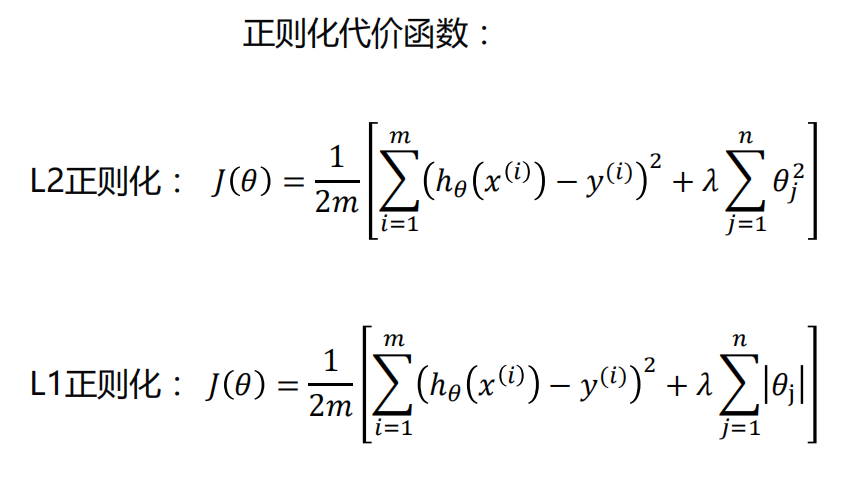

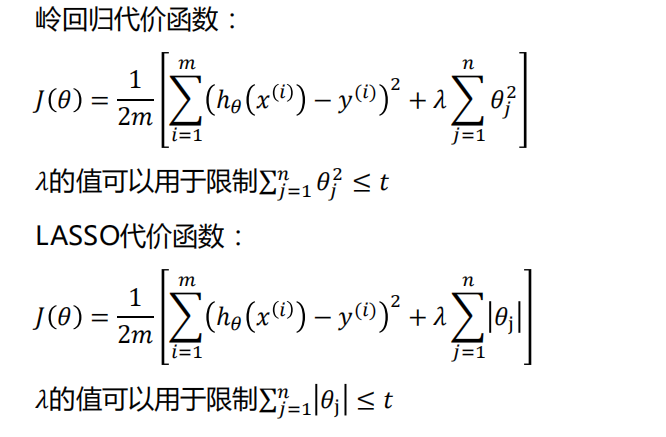

Regularization

Applications of Ridge Regression, LASSO Regression, and Elastic Net

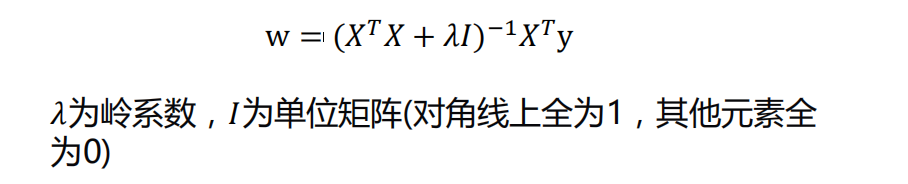

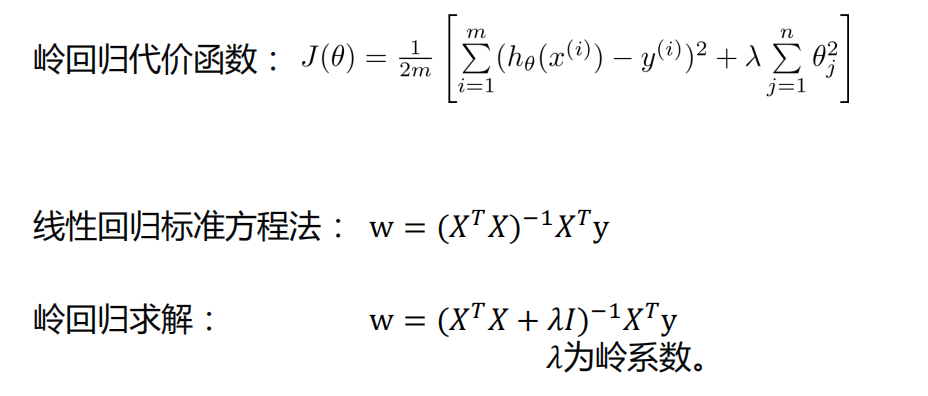

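Ridge Regression

$w = (X^TX)^{-1}X^Ty$

- If the data has more features than samples (n features, m samples, n > m), this computation fails: $X^TX$ is not full rank, so it is not invertible.

To solve this problem, statisticians introduced ridge regression.

- Ridge regression adds $\lambda I$ to $X^TX$ so that the matrix becomes invertible: $w = (X^TX + \lambda I)^{-1}X^Ty$, where $\lambda$ is the ridge coefficient and $I$ is the identity matrix.

- Ridge regression was first used to handle cases with more features than samples. It is now also used to introduce bias into the estimate in exchange for a better estimate, and it can address multicollinearity. Ridge regression is a biased estimator.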

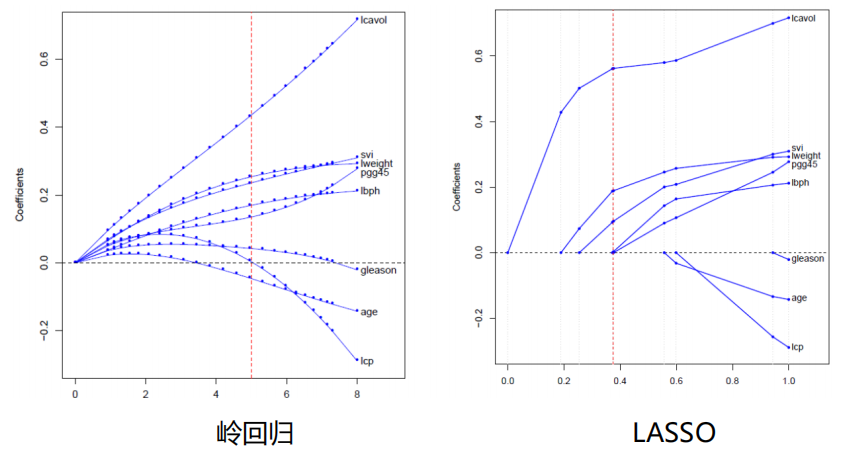

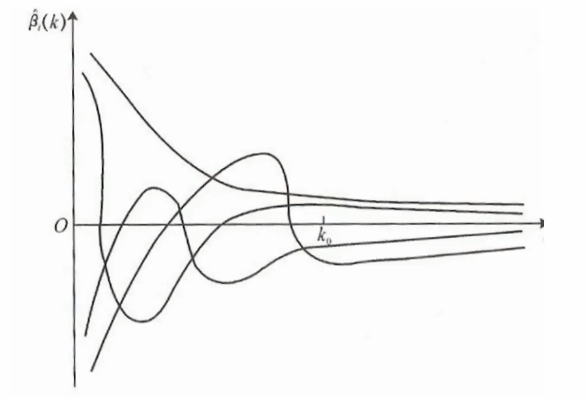

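Choose the value of $\lambda$ such that:

- The ridge estimates of the regression coefficients are essentially stable.

- The residual sum of squares does not increase too much.

- In the ridge trace plot, $\lambda$ is on the horizontal axis and the estimated parameters are on the vertical axis.

sklearn - Code Implementation

Longley Data Set

The Longley data set comes from a paper that J. W. Longley published in JASA in 1967. It is macroeconomic data with strong collinearity, containing GNP deflator, GNP (gross national product), Unemployed (number of unemployed), ArmedForces (size of the armed forces), Population, Year, and Employed (number employed).

- Because of its severe multicollinearity, the Longley data set was often used in the early days to test the numerical accuracy of algorithms and computers.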
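The original sklearn cell is missing. The sketch below shows one way to fit ridge regression with a cross-validated penalty using `sklearn.linear_model.RidgeCV`. It does not load the Longley data. A small synthetic data set with two nearly identical features stands in for it, and the candidate `alphas` grid is an assumption. Note that sklearn calls the penalty strength `alpha` rather than $\lambda$.

```python
import numpy as np
from sklearn.linear_model import RidgeCV

# Synthetic collinear data (assumed stand-in for the Longley data).
rng = np.random.default_rng(0)
x1 = rng.normal(size=50)
x2 = x1 + rng.normal(scale=0.01, size=50)  # nearly a copy of x1
X = np.column_stack([x1, x2])
y = 1.0 + 3.0 * x1 + rng.normal(scale=0.1, size=50)

# Candidate penalty strengths; RidgeCV picks one by cross-validation.
alphas = np.logspace(-3, 2, 30)
model = RidgeCV(alphas=alphas).fit(X, y)

best_alpha = model.alpha_    # selected penalty strength
coefficients = model.coef_   # weights of the two collinear features
r2 = model.score(X, y)
```

With collinear features the individual weights are poorly determined, but their sum stays near the true effect, which is the kind of stability the ridge penalty is meant to provide.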

Results

Normal Equation - Code Implementation
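A minimal NumPy sketch of the ridge normal equation $w = (X^TX + \lambda I)^{-1}X^Ty$ follows. The random data, the function name `ridge_normal_equation` and `lam=0.1` are assumptions. For brevity there is no intercept column, so every weight is penalized.

```python
import numpy as np

def ridge_normal_equation(X, y, lam):
    """Solve (X^T X + lam * I) w = X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)

# More features (n = 5) than samples (m = 3), so X^T X is singular.
rng = np.random.default_rng(0)
X = rng.normal(size=(3, 5))
y = rng.normal(size=3)

rank = np.linalg.matrix_rank(X.T @ X)     # at most 3, below the 5 needed for an inverse
w = ridge_normal_equation(X, y, lam=0.1)  # the added lam * I makes the system solvable
```

Adding `lam * np.eye(n_features)` shifts every eigenvalue of `X.T @ X` up by `lam`, which is why the regularized matrix is invertible for any `lam > 0`.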

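LASSO

- Tibshirani (1996) proposed the Lasso (Least Absolute Shrinkage and Selection Operator) algorithm.

- It builds a first-order (L1) penalty function to obtain a more compact model. It drives the coefficients of some variables to exactly zero, which makes the model highly interpretable. With ridge regression, the chance of an estimated coefficient being exactly zero is negligible, which makes variable selection difficult.

- It handles data with multicollinearity well and, like ridge regression, is a biased estimator.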

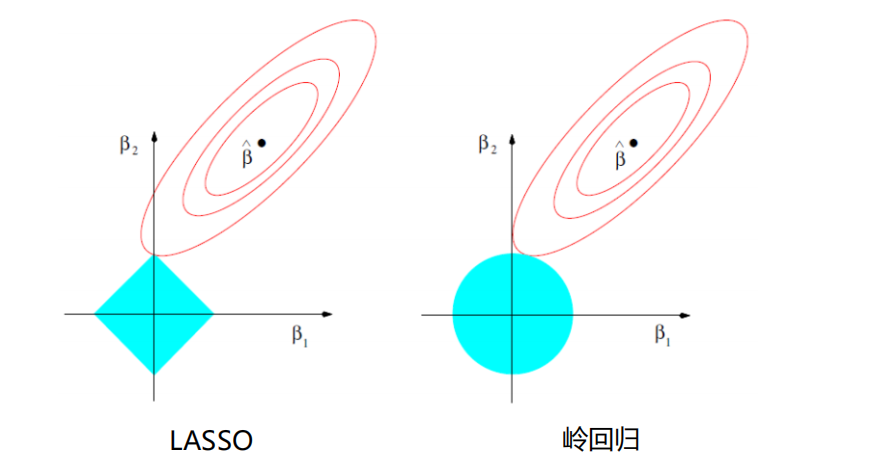

LASSO vs. Ridge Regression

- Graphical comparison

sklearn - Code Implementation: Lasso
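Since the original cell is not available, here is a hedged sketch with `sklearn.linear_model.Lasso`. The synthetic data (five features, only two of which affect `y`) and `alpha=0.1` are assumptions chosen to make the variable-selection effect visible.

```python
import numpy as np
from sklearn.linear_model import Lasso

# Synthetic data (assumed): only the first two of five features matter.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 5))
y = 4.0 * X[:, 0] - 2.0 * X[:, 1] + rng.normal(scale=0.1, size=100)

model = Lasso(alpha=0.1).fit(X, y)
coefficients = model.coef_
n_zero = int(np.sum(coefficients == 0.0))  # features dropped by the L1 penalty
```

The irrelevant features receive coefficients of exactly zero, while the two informative ones are kept and slightly shrunk toward zero.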

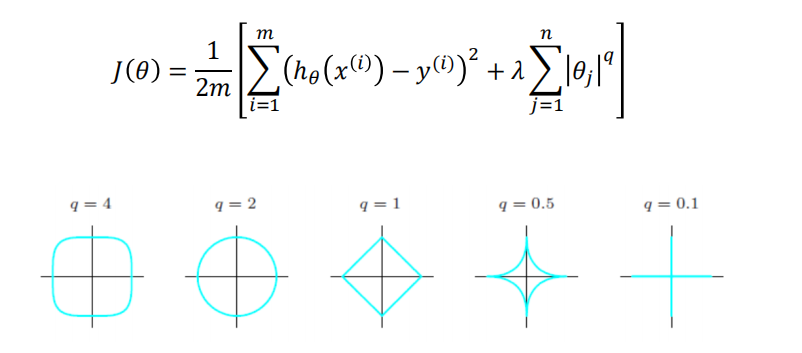

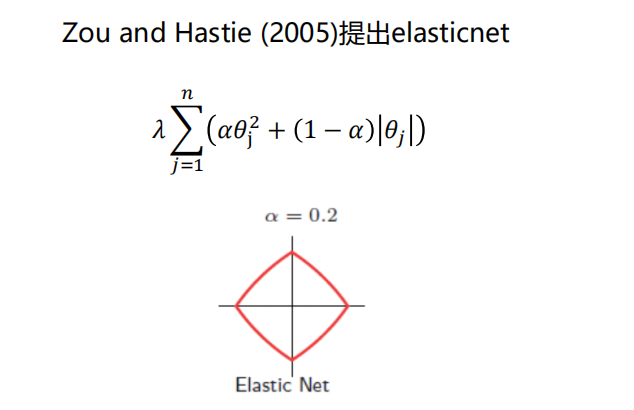

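Elastic Net

It is easy to see that q = 2 gives ridge regression and q = 1 gives Lasso regression.

sklearn - Code Implementation: ElasticNet

Some of the results are similar to those above, so they are not shown here.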
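A minimal sketch with `sklearn.linear_model.ElasticNet`, again on assumed synthetic data. Note that sklearn does not use the exponent q. It mixes the L1 and L2 penalties through `l1_ratio`, so `l1_ratio=1` corresponds to Lasso and values near 0 approach ridge. The values `alpha=0.1` and `l1_ratio=0.5` are arbitrary choices for the example.

```python
import numpy as np
from sklearn.linear_model import ElasticNet

# Synthetic data (assumed): only the first two of five features matter.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 5))
y = 4.0 * X[:, 0] - 2.0 * X[:, 1] + rng.normal(scale=0.1, size=100)

# alpha sets the overall penalty strength; l1_ratio balances L1 against L2.
model = ElasticNet(alpha=0.1, l1_ratio=0.5).fit(X, y)
coefficients = model.coef_
r2 = model.score(X, y)
```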